Topic 1: What Is Mixture-of-Experts (MoE)?

We discuss the origins of MoE, why it is better than a single neural network, Sparsely-Gated MoE, and the sudden hype. Enjoy the collection of helpful links.

Introduction

We've received great feedback on our detailed explorations of Retrieval-Augmented Generation (RAG), Transformer architecture, and multimodal models in the FMOps series. To keep you updated on the latest AI developments, we're launching a new series called “AI 101.” These subjects often turn into buzzwords with little clear explanation, so we will offer straightforward, plain-English insights into these complex areas and help you learn how to implement them.

In this edition, we focus on the Mixture-of-Experts (MoE) model, a fascinating framework that is reshaping how we build and understand scalable AI systems. Several top models use MoE, including Mistral AI's Mixtral, Databricks' DBRX, AI21 Labs' Jamba, xAI's Grok-1, and Snowflake's Arctic. Want to learn more? Join us for a deep dive into the history, original ideas, and breakthrough innovations of the MoE architecture.

In today’s episode, we will cover:

History – where it comes from and the initial architecture

But why is such a modular architecture better than a single neural network?

MoE and Deep Learning (MoE + Conditional Computation) - what was the key innovation?

MoEs + Transformers and the sudden hype

Conclusion

Bonus: Relevant resources to continue learning about MoEs

Where it comes from and the initial architecture

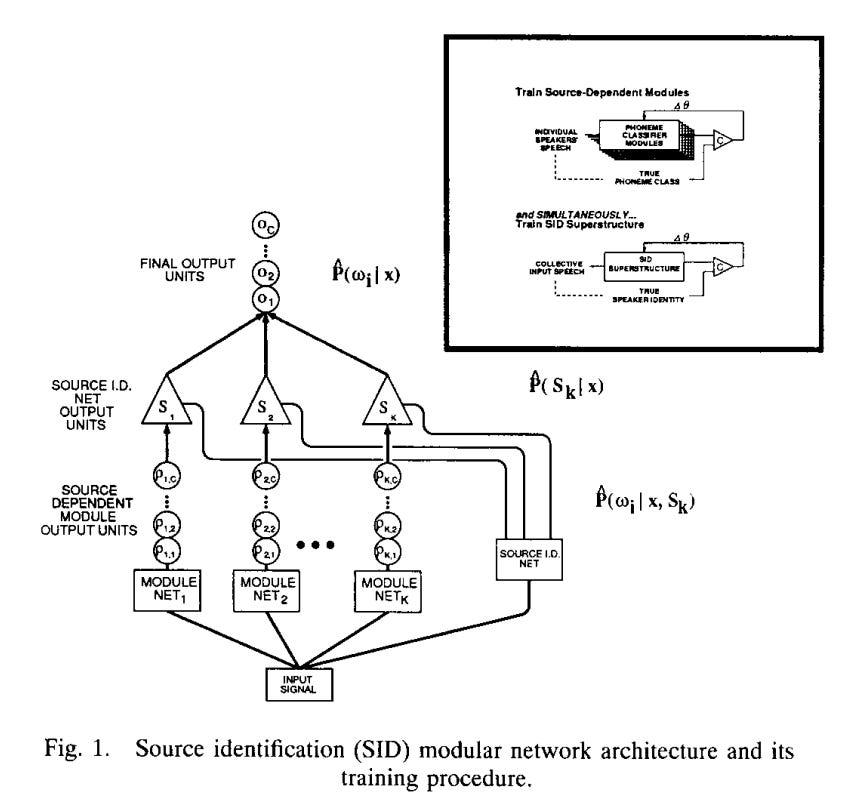

The concept of Mixture-of-Experts dates back to the 1988 Connectionist Summer School in Pittsburgh, where two researchers, Robert Jacobs and Geoffrey Hinton, introduced the idea of training multiple models, called "experts," each on a specific subset of the training data. The subsets would be created by dividing the original dataset by subtask, so each model would specialize in its own subtask. A separate network, known as the "gating" network, would then determine which expert to use for each case.
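To make the roles of experts and gate concrete, here is a minimal sketch, assuming toy one-dimensional experts and a linear gate whose scores are normalized with softmax. The experts, weights, and function names (`gate`, `moe_hard`, `moe_soft`) are hypothetical and hand-set for illustration; in a real MoE the experts and the gate are learned together, and this is not the exact procedure from the original work. `moe_hard` routes each input to one expert, matching the description above, while `moe_soft` blends all experts by gate probability, a common alternative.

```python
import math
from typing import Callable, List

# An "expert" here is just a function from input to output (hypothetical toy setup).
Expert = Callable[[float], float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: turns raw gate scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gate(x: float, weights: List[float], biases: List[float]) -> List[float]:
    """Linear gating network: one score per expert, normalized with softmax."""
    return softmax([w * x + b for w, b in zip(weights, biases)])


def moe_hard(x: float, experts: List[Expert],
             weights: List[float], biases: List[float]) -> float:
    """Send the input to the single expert with the highest gate probability."""
    probs = gate(x, weights, biases)
    best = max(range(len(experts)), key=lambda i: probs[i])
    return experts[best](x)


def moe_soft(x: float, experts: List[Expert],
             weights: List[float], biases: List[float]) -> float:
    """Blend every expert's output, weighted by its gate probability."""
    probs = gate(x, weights, biases)
    return sum(p * f(x) for p, f in zip(probs, experts))


# Two hand-made experts: expert 0 specializes in negative inputs,
# expert 1 in positive inputs, so together they compute |x|.
experts: List[Expert] = [lambda x: -x, lambda x: x]
weights, biases = [-5.0, 5.0], [0.0, 0.0]  # hypothetical gate parameters

print(moe_hard(2.0, experts, weights, biases))   # 2.0 (gate picks expert 1)
print(moe_hard(-3.0, experts, weights, biases))  # 3.0 (gate picks expert 0)
```

Each expert only has to be good on its own region of the input, and the gate's job is to recognize which region an input belongs to. That division of labor is the core idea the later MoE work builds on.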

The idea was explored further in early research papers, but these did not fully realize the efficiency Jacobs and Hinton had originally envisioned. In 1991, Jacobs and Hinton, together with Michael Jordan from MIT and Steven Nowlan from the University of Toronto, proposed a refinement in the paper “Adaptive Mixtures of Local Experts,” which is considered the origin of the modern MoE architecture.