Guest Post: Optimizing RAG Applications: Methodologies, Metrics, and Evaluation Tools by Cheney Zhang

How to Evaluate Retrieval Augmented Generation (RAG) Applications

RAG, or Retrieval Augmented Generation, is a prominent AI framework in the era of large language models (LLMs) like ChatGPT. It enhances the capabilities of these models by integrating external knowledge, ensuring more accurate and current responses. A standard RAG system includes an LLM, a vector database like Milvus, and some prompts as code.

As more and more developers and businesses adopt RAG for building GenAI applications, evaluating their effectiveness is becoming increasingly important. In another post, we evaluated the performance of two different RAG systems built with the OpenAI Assistants and the Milvus vector database, which shed some light on assessing RAG systems. This post will dive deeper and discuss the methodologies used to evaluate RAG applications. We'll also introduce some powerful evaluation tools and highlight standard metrics.

RAG evaluation metrics

Evaluating RAG applications is more than simply comparing a few examples. The key lies in using convincing, quantitative, and reproducible metrics to assess these applications. In this journey, we’ll introduce three categories of metrics:

Metrics based on the ground truth

Metrics without the ground truth

Metrics based on LLM responses

Metrics based on the ground truth

Ground truth refers to well-established answers or knowledge document chunks in a dataset corresponding to user queries. When the ground truth is the answers, we can directly compare the ground truth with the RAG responses, facilitating an end-to-end measurement using metrics like answer semantic similarity and answer correctness.

Below is an example of evaluating answers based on their correctness.

Ground truth: Einstein was born in 1879 in Germany.

High answer correctness: In 1879, in Germany, Einstein was born.

Low answer correctness: In Spain, Einstein was born in 1879.

How to generate the ground truth for your own dataset

We have now established the importance of using datasets with the ground truth for evaluating RAG applications. However, what if you want to assess a RAG application using your private datasets without annotated ground truth? How do you generate the required ground truth for your datasets?

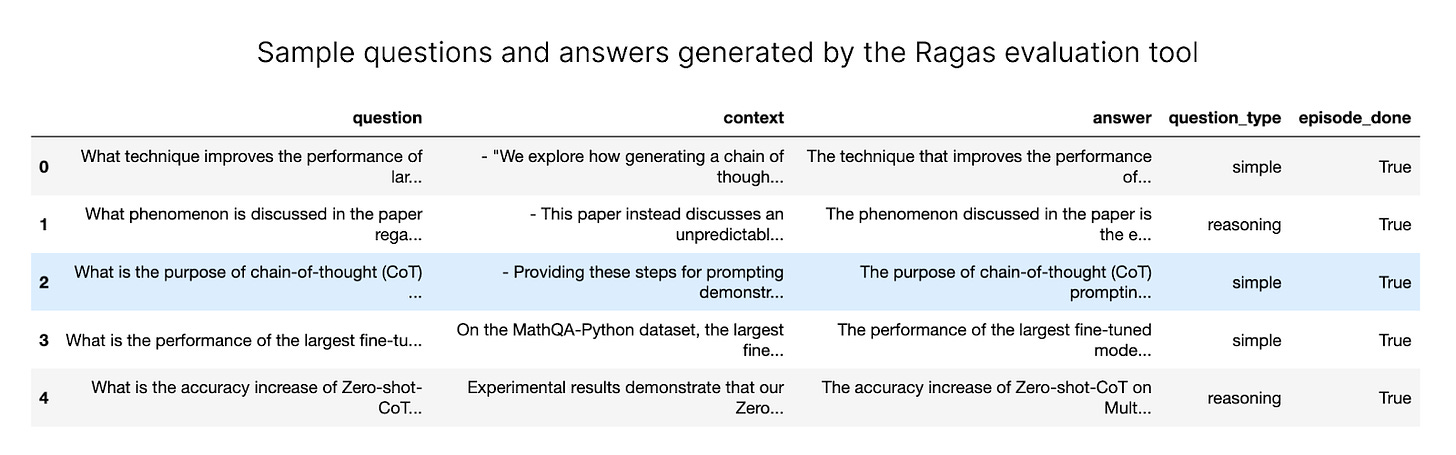

The simplest method is to ask an LLM like ChatGPT to generate sample questions and answers based on your proprietary dataset. Tools like Ragas and LlamaIndex also provide methods for generating test data tailored to your knowledge documents.

These generated test datasets, comprising questions, context, and corresponding answers, facilitate quantitative evaluation without reliance on unrelated external baseline datasets. This approach empowers users to assess RAG systems using their unique data, ensuring a more customized and meaningful evaluation process.