FOD#107: It's NOT the year of agents – it's the year when WE got used to AI

Plus 10 open-source deep research assistants and other useful stuff

A few notes about July: it’s summer slowdown

Everyone needs a break – especially at the neck-breaking speed AI is moving. Many of you asked for roundups to catch up on the deeper topics we’ve covered. So in July, we’re pressing pause on the news cycle and diving into reflection.

We’ll wrap up our Agentic Workflows series (and introduce the next one), revisit core concepts, methods, and models, and reshare some of our most insightful interviews. You’ll also get curated collections of the best books and research papers we’ve featured – plus a look back at the bold predictions our readers made at the end of 2024. Time to check who was right :)

We hope you will be able slow down with us, and absorb some AI knowledge without the release-day rush.

Topic number one: 2025 is not the year of agents

So many conversations these days circle around agents and agentic workflows.

Agents are working. Actually, no – agents are still barely capable.

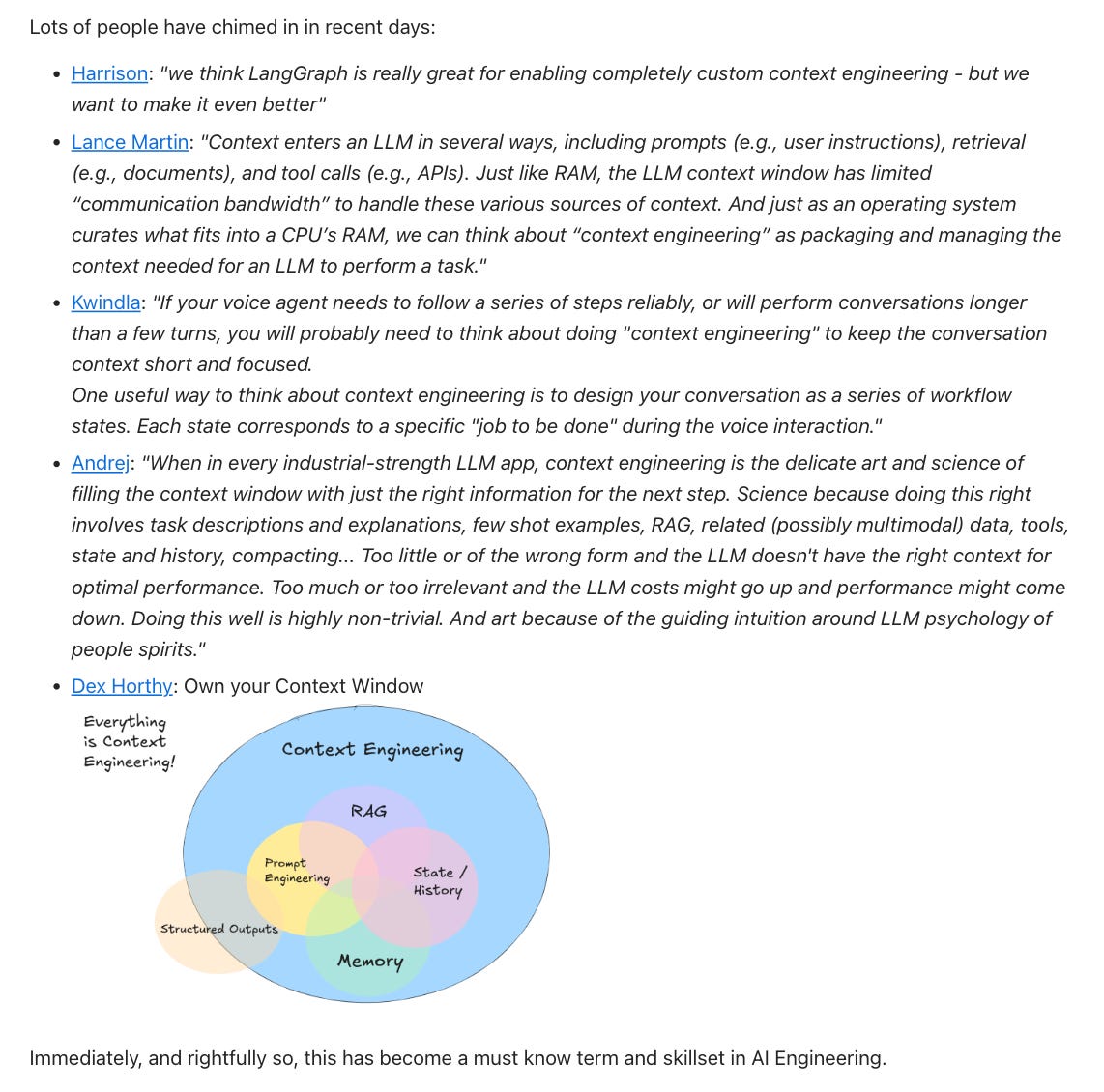

Are we vibe-coding with them, or co-coding with them? Everyone’s busy arguing whether we’re doing prompt engineering or context engineering. Hours are spent on that.

And what is our role in this loop – are we collaborators, or are we becoming callable tools for our AI? (Someone really needs to coin a term for that, like a "bot-y call", especially if it comes in the middle of the night).

The companies haven’t even agreed on what an agent is, just to name a few:

But I think that’s not what matters. 2025 is not the year of agents, we are still figuring it out.

It sounds simple, what I’m about to say – but it marks an absolutely profound shift.

2025 is the year we got used to AI.

It’s the year the magic fades into the background, becoming so essential that we forget it was ever magic at all. It’s the difference when you forgetting the screeching song of your dial-up modem and start checking your Wi-Fi speed.

Topic number two: In this video, I also provide information from two reports that support my point. Links in the description section. Watch it here

If you like it – please subscribe. I’d say, it’s refreshingly human.

Curated Collections – a super helpful list

Follow us on 🎥 YouTube Twitter Hugging Face 🤗

We are reading/watching

This is just such an awesome project, feeling grateful for this technology. Thank you, Google, and happy 20 years of Google Earth.

News from The Usual Suspects ©

Laude Institute bets big on research that ships

Launched with a $100M personal bet by Andy Konwinski (Apache Spark, Databricks), the Laude Institute aims to close the gap between lab research and real-world impact. A nonprofit with a public benefit arm, Laude is backing both early “Slingshots” like Terminal-Bench and long-horizon “Moonshots” for AI in science, society, and public goods. Board includes Dave Patterson, Jeff Dean, Joelle Pineau, and a who’s who of computing. Mission: accelerate responsible, applied breakthroughs – fast.

OpenAI feels the burn

After Meta lured away eight senior researchers – reportedly dangling sky-high bonuses – OpenAI’s leadership is scrambling to stop the talent bleed. Chief Research Officer Mark Chen called it a “visceral” loss, likening it to a break-in. OpenAI is now “recalibrating comp” and rethinking how to keep its stars from jumping ship. The company is also, apparently, taking a one-week company-wide shutdown to let burned-out staff recharge – except leadership, who are staying on to fend off Meta’s aggressive talent raid. Madness.

Runway hits ‘Play’ on gaming

After cutting its teeth in Hollywood, Runway is bringing its generative AI toolkit to the gaming world. The $3B startup is launching a consumer-facing product to generate games via simple chat prompts, with more advanced tools promised by year’s end. CEO Cristóbal Valenzuela says devs are embracing AI faster than filmmakers did – and no, he’s not selling to Zuckerberg (yet).

Google introduced so many things last week:

Doppl, lets you virtually try on outfits using photos or screenshots. Built on Google Shopping’s AI try-on tech, Doppl animates your fashion choices with personalized videos. Don’t expect a perfect fit – but it is pretty accurate.

Ask Photos and You Shall Retrieve – their AI-powered search tool lets users query their image libraries with natural language. Now it responds faster to simple prompts – like “dogs” – while still flexing its Gemini-powered muscles on deeper asks.

Gemini CLI – an open-source AI agent that brings Gemini 2.5 Pro directly to developers’ terminals – free of charge (basically). With a 1M-token context window and unmatched usage limits (60 requests/min, 1,000/day), it’s a quiet power move. Some devs complain it doesn’t work properly though (yet).

YouTube – Your Binge Buddy. It is turning search into more of a guided tour with AI-powered carousels – curated clips, creator insights, and smart summaries for queries like “best beaches in Hawaii.” Meanwhile, its conversational AI feature, once Premium-only, is expanding to more U.S. users.

more in Models →

Models to pay attention to:

Introducing Gemma 3n: The developer guide

Researchers from Google introduced Gemma 3n, an on-device, multimodal LLM optimized for mobile hardware. It supports text, image, audio, and video inputs with outputs in text. Two models – E2B (2GB VRAM) and E4B (3GB VRAM) – achieve 2x-13x performance gains using MatFormer, PLE, and KV Cache Sharing. The E4B scores over 1300 in LMArena. Audio support includes speech-to-text/translation; MobileNet-V5 vision encoder runs 60 FPS on Pixel, outperforming SoViT with 46% fewer parameters. Open-sourced →read the paper

Hunyuan A13B

Researchers from Tencent introduced Hunyuan A13B, a 13-billion parameter LLM trained on 3.2 trillion tokens. It achieves 82.7% on MMLU, 73.5% on CMMLU, 36.6% on GSM8K, and 61.7% on HumanEval. The model outperforms LLaMA 3 8B and Qwen 1.5 14B on 10 of 13 benchmarks. It supports 27 programming languages, multilingual instruction following, and demonstrates strong reasoning and code synthesis. Hunyuan A13B is open-sourced under a permissive license →read the report

Lfm-1b-math: Can small models be concise reasoners?

Researchers from Liquid AI enhanced a 1.3B parameter chat model, LFM-1.3B, into LFM-1.3B-Math using 4.5M supervised samples and RL. Fine-tuning improved performance from 14.08% to 59.87% on reasoning benchmarks, while GRPO-based RL reduced verbosity and raised constrained (4k token) accuracy from 30.09% to 46.98%. Despite no math-specific pretraining, the model matched or outperformed specialized peers, making it ideal for edge deployment with tight compute and memory budgets →read the paper

AlphaGenome: AI for better understanding the genome

Researchers from Google DeepMind developed AlphaGenome, an AI model that predicts how DNA variants affect gene regulation across cell types. It processes sequences up to 1 million base pairs, outputs base-level predictions, and scores variant effects in seconds. Trained on ENCODE, GTEx, FANTOM5, and 4D Nucleome data, it outperformed existing models in 22/24 single-sequence tasks and 24/26 variant-effect benchmarks. It uniquely models splice junctions and enables comprehensive multimodal genomic analysis →read the paper

Gemini Robotics On-Device brings AI to local robotic devices

Researchers from Google DeepMind introduced Gemini Robotics On-Device, a compact VLA model for bi-arm robots capable of natural language instruction following and dexterous tasks like folding clothes or assembling belts. Running fully offline, it adapts to new tasks with 50–100 demonstrations and outperforms prior on-device models in generalization and instruction-following benchmarks. It was successfully fine-tuned to different robots like Franka FR3 and Apptronik’s Apollo, demonstrating strong embodiment generalization and fast adaptation capabilities →read the paperMercury, a General Chat Diffusion Large Language Model

Researchers from Inception Labs released Mercury, a diffusion-based LLM delivering 7x faster throughput than GPT-4.1 Nano, achieving 708 tokens/sec. Mercury matches or exceeds performance on key benchmarks: 85% on HumanEval, 83% on MATH-500, and 30% on AIME 2024. Despite running on standard NVIDIA GPUs, it achieves lower real-world voice latency than Llama 3.3 70B on Cerebras. Mercury powers Microsoft’s NLWeb for fast, grounded, hallucination-free natural language interactions →read the paper

Recommended dataset and benchmark

FineWeb2: One Pipeline to Scale Them All (by Hugging Face) builds a multilingual web-scale dataset pipeline adaptable to over 1,000 languages with language-specific filtering and rebalancing ->read the paper